Detect Feature Difference with Adversarial Validation

Introduction to Adversarial Validation

Adversarial Validation (AV) is a popular technique often used to reduce over-fitting during training.

The idea is simple: if your training data is identical to the test data, your model will over-fit, because the test set offers no feedback on generalisation. The more dissimilar your train and test data are (while still coming from the same generating distribution), the more feedback the test set can provide, which helps reduce over-fitting.

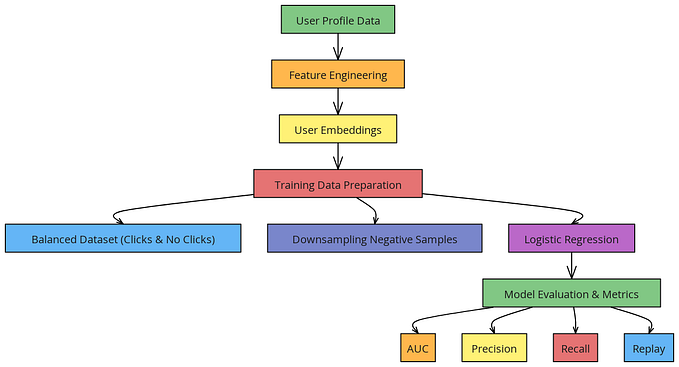

The typical steps for performing adversarial validation are:

- Take a sample from both the training and test datasets.

- Label each row by its source (1 if it comes from the training data, 0 otherwise), then combine the samples and split them into a new train and test data set.

- Train a binary classifier (e.g. CatBoost or logistic regression) on the new train data set.

- Predict on the new test data set and calculate a metric such as AUC.

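The lower the AUC (that is, the closer it is to 0.5), the higher the similarity between the two data sets, because the classifier cannot tell them apart. Following the reasoning above, reducing the similarity should improve the robustness of the model.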
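As a minimal sketch of the steps above, the snippet below uses scikit-learn's `LogisticRegression` as the classifier. The two data frames are synthetic stand-ins drawn from the same distribution, and the column names are purely illustrative assumptions, so the AUC should land close to 0.5.

```python
import numpy as np
import pandas as pd
from sklearn.linear_model import LogisticRegression
from sklearn.metrics import roc_auc_score
from sklearn.model_selection import train_test_split

rng = np.random.default_rng(0)

# Hypothetical stand-ins for samples of the real training and test data.
columns = ["a", "b", "c"]
train_sample = pd.DataFrame(rng.normal(size=(1000, 3)), columns=columns)
test_sample = pd.DataFrame(rng.normal(size=(1000, 3)), columns=columns)

# Label the origin of each row: 1 for training data, 0 for test data.
combined = pd.concat([train_sample, test_sample], ignore_index=True)
origin = np.concatenate([np.ones(len(train_sample)), np.zeros(len(test_sample))])

# Split the combined data, fit a binary classifier and score it with AUC.
x_fit, x_eval, y_fit, y_eval = train_test_split(
    combined, origin, test_size=0.3, stratify=origin, random_state=0
)
classifier = LogisticRegression().fit(x_fit, y_fit)
auc = roc_auc_score(y_eval, classifier.predict_proba(x_eval)[:, 1])
print(f"adversarial AUC: {auc:.3f}")
```

Swapping in real samples only requires replacing the two synthetic data frames; everything else stays the same.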

Example code:

Here is some boilerplate code to perform Adversarial Validation using CatBoost as the discriminator.

    from typing import Optional, Tuple

    import numpy as np
    import pandas as pd
    from sklearn.model_selection import train_test_split
    from sklearn.metrics import (
        confusion_matrix,
        classification_report,
        roc_auc_score
    )
    from catboost import (
        Pool,
        CatBoostClassifier
    )


    class CatboostDiscriminator:
        """Perform adversarial validation using CatBoostClassifier.
        """

        def __init__(self, categorical_features: list, **kwargs):
            # Default parameters; any keyword argument overrides them.
            self.catboost_params = {
                'iterations': 300,
                'thread_count': 10,
                'loss_function': 'Logloss',
                'depth': 3,
                'learning_rate': 0.5,
                'od_type': 'Iter',
                'od_wait': 100,
                'subsample': 0.5,
            }
            self.catboost_params.update(kwargs)
            self.categorical_features = categorical_features
            self.model = CatBoostClassifier(**self.catboost_params)

        def _prepare_train_validate_data(
                self,
                train_x: pd.DataFrame,
                train_y: pd.Series) -> Tuple[Pool, Pool]:
            """Split the training data into train and validate so we can utilise
            early stopping in training.
            """
            train_x, validate_x, train_y, validate_y = train_test_split(train_x, train_y)
            train_pool = Pool(train_x, train_y, cat_features=self.categorical_features)
            validate_pool = Pool(validate_x, validate_y, cat_features=self.categorical_features)
            return train_pool, validate_pool

        def fit(self,
                train_x: pd.DataFrame,
                train_y: pd.Series):
            """Fit the catboost model.
            """
            train_pool, validate_pool = self._prepare_train_validate_data(train_x, train_y)
            self.model.fit(
                train_pool,
                eval_set=validate_pool,
                silent=True
            )

        def predict(self, X):
            return self.model.predict(X)

        def predict_proba(self, X):
            return self.model.predict_proba(X)

        def get_feature_importance(self):
            return self.model.get_feature_importance()

        def get_feature_names(self):
            return self.model.feature_names_


    class AdversarialValidator:
        """Adversarial validation is a technique to compare two different
        datasets by fitting a model and attempting to predict the origin
        of each sample.

        Two datasets are equivalent if the model has a poor performance
        (e.g. 50% accuracy).
        """

        def __init__(self,
                     reference_data: pd.DataFrame,
                     alternative_data: pd.DataFrame,
                     train_size: float=0.7,
                     random_state: int=1,
                     discriminator: Optional[CatboostDiscriminator]=None):
            self.reference_data = reference_data
            self.alternative_data = alternative_data
            self.train_size = train_size
            self.random_state = random_state
            # Assumption: default to a CatBoost discriminator without
            # categorical features when none is supplied.
            self.model = discriminator or CatboostDiscriminator(categorical_features=[])
            self._is_fitted = False

        def _check_is_fitted(self) -> None:
            """Raise if the discriminator has not been trained yet.
            """
            if not self._is_fitted:
                raise RuntimeError('Call train_discriminator() first.')

        def adversarial_sampling(self):
            """Sample the data to create the adversarial training/test
            data set. The reference data is labelled 1, the alternative 0.
            """
            self.reference_target = pd.Series(
                np.ones(self.reference_data.shape[0], dtype=int))
            self.alternative_target = pd.Series(
                np.zeros(self.alternative_data.shape[0], dtype=int))
            return train_test_split(
                pd.concat([self.reference_data, self.alternative_data], ignore_index=True),
                pd.concat([self.reference_target, self.alternative_target], ignore_index=True),
                train_size=self.train_size,
                random_state=self.random_state
            )

        def train_discriminator(self) -> None:
            """Split the data using the sampler and fit the discriminator
            model specified.
            """
            self.train_x, self.test_x, self.train_y, self.test_y = (
                self.adversarial_sampling()
            )
            self.model.fit(self.train_x, self.train_y)
            self._is_fitted = True

        def summary(self) -> None:
            """Binary classification report of the prediction.
            """
            self._check_is_fitted()
            predicted = self.model.predict(self.test_x)
            print('Confusion matrix:\n')
            print(confusion_matrix(self.test_y, predicted))
            print('Classification report:\n')
            print(classification_report(self.test_y, predicted))

        def get_auc(self) -> float:
            """Get the AUC on the held-out adversarial test set.
            """
            self._check_is_fitted()
            predicted_proba = self.model.predict_proba(self.test_x)[:, 1]
            return roc_auc_score(self.test_y, predicted_proba)

        def get_top_violators(self, n: int=10) -> pd.DataFrame:
            """Get the features that differ between the two datasets.

            A feature with high importance implies that its distribution
            differs between the two datasets, which allows the model to
            utilise it.
            """
            self._check_is_fitted()
            return pd.DataFrame({
                'feature_name': self.model.get_feature_names(),
                'importance': self.model.get_feature_importance()
            }).sort_values('importance', ascending=False).head(n)

Extending the use case

But this technique has more to offer than reducing over-fitting. In practice, I have found at least two applications that have greatly improved my efficiency when working with data.

Last year, we underwent a data pipeline migration in which we moved from Airflow to Flyte as our orchestrator. In addition, we offloaded the computation to BigQuery instead of Python whenever possible to reduce cost and improve efficiency.

It was no small feat to ensure that hundreds of replicated features were identical to those in the existing data pipeline, and this is where adversarial validation came to the rescue.

Instead of going through the tedious process of comparing the mean, median and the rest of the five-number summary for each feature, Adversarial Validation quickly determined whether the two data sets were similar enough based on a predetermined threshold (e.g. AUC = 0.55). And since we had already fitted a binary classifier, we could use its feature importance to determine which features provided the most “difference” between the two data sets.
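To make that workflow concrete, here is a small self-contained sketch. It is not our production code: it swaps CatBoost for scikit-learn's `RandomForestClassifier` to keep dependencies light, and the helper `compare_datasets`, the column names and the simulated error in `visits` are all hypothetical.

```python
import numpy as np
import pandas as pd
from sklearn.ensemble import RandomForestClassifier
from sklearn.metrics import roc_auc_score
from sklearn.model_selection import train_test_split

AUC_THRESHOLD = 0.55  # example threshold mentioned in the text


def compare_datasets(old: pd.DataFrame, new: pd.DataFrame, seed: int = 0):
    """Return the adversarial AUC and the features ranked by importance."""
    features = pd.concat([old, new], ignore_index=True)
    origin = np.concatenate([np.ones(len(old)), np.zeros(len(new))])
    x_fit, x_eval, y_fit, y_eval = train_test_split(
        features, origin, test_size=0.3, stratify=origin, random_state=seed
    )
    model = RandomForestClassifier(n_estimators=100, random_state=seed)
    model.fit(x_fit, y_fit)
    auc = roc_auc_score(y_eval, model.predict_proba(x_eval)[:, 1])
    ranking = pd.Series(model.feature_importances_, index=features.columns)
    return auc, ranking.sort_values(ascending=False)


# Synthetic stand-ins for the output of the old and the new pipeline.
rng = np.random.default_rng(0)
columns = ["spend", "visits", "tenure"]
old_pipeline = pd.DataFrame(rng.normal(size=(2000, 3)), columns=columns)
new_pipeline = pd.DataFrame(rng.normal(size=(2000, 3)), columns=columns)
new_pipeline["visits"] += 2.0  # simulate a feature that was replicated incorrectly

auc, ranking = compare_datasets(old_pipeline, new_pipeline)
if auc > AUC_THRESHOLD:
    print(f"AUC {auc:.3f} exceeds {AUC_THRESHOLD}; inspect these features first:")
    print(ranking.head())
```

The ranking gives a starting point for debugging: fix the top feature, rerun, and repeat until the AUC drops below the threshold.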

Adversarial Validation sped up our data migration at least tenfold.

Another practical use case, also adopted by Uber, is detecting concept drift in the data.

As machine learning systems are alive and constantly evolving, being able to monitor and detect concept drift is critical.
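One possible way to monitor this is to compare each incoming batch against a fixed reference window. The sketch below is only an assumption about how such a check could look (it is not Uber's implementation): the helper `drift_auc` and the synthetic batches are hypothetical, and because it only looks at the input features, it picks up shifts in their distribution rather than changes in the target.

```python
import numpy as np
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import cross_val_score


def drift_auc(reference: np.ndarray, current: np.ndarray) -> float:
    """Cross-validated AUC of a classifier separating reference from current."""
    features = np.vstack([reference, current])
    origin = np.concatenate([np.ones(len(reference)), np.zeros(len(current))])
    scores = cross_val_score(
        LogisticRegression(), features, origin, cv=5, scoring="roc_auc"
    )
    return float(scores.mean())


rng = np.random.default_rng(0)
reference = rng.normal(size=(1000, 3))      # window the model was trained on
stable_batch = rng.normal(size=(1000, 3))   # new data, same distribution
drifted_batch = rng.normal(size=(1000, 3))  # new data, first feature shifted
drifted_batch[:, 0] += 1.0

for name, batch in [("stable", stable_batch), ("drifted", drifted_batch)]:
    print(name, round(drift_auc(reference, batch), 3))
```

A batch whose AUC stays near 0.5 looks like the reference window, while a clearly higher AUC is a signal to investigate or retrain.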

Takeaway

Applied machine learning is basically feature engineering, and Adversarial Validation is a useful addition to any data scientist’s toolbox.